NLP key terminology

So one of the coolest things about these NLP packages is their built-in corpora. In case you're wondering what the hell a corpus is, trust me, I struggled a lot with these new words too. The world of NLP seems to have its own set of technical jargon that you probably won't hear anywhere else. When I first started working through the tutorials, I kept going back and forth to understand what these terms meant, until I decided I'd had enough and wanted a repository of all the keywords.

It almost felt like going back to when I first read War and Peace: to keep track of all the characters, I kept going to the character list, then gave up, drew family trees, and put them up on my bedroom walls. I'm sure my mother positively thought I was going a little crazy. Anyway, I think it's time to do the same with this.

So, here goes the common keyword list:

Tokenization

Tokenization is, generally, an early step in the NLP process, a step which splits longer strings of text into smaller pieces, or tokens. Larger chunks of text can be tokenized into sentences, sentences can be tokenized into words, etc. Further processing is generally performed after a piece of text has been appropriately tokenized.
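To make the two levels of tokenization concrete, here is a minimal sketch that uses only regular expressions. The function names `sent_tokenize` and `word_tokenize` are my own (they mirror the names NLTK uses, but this is not NLTK's trained tokenizer), and the splitting rules are deliberately naive: a sentence ends at `.`, `!` or `?` followed by whitespace, and every punctuation mark becomes its own token.

```python
import re

def sent_tokenize(text):
    # Naive rule: split after '.', '!' or '?' when followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def word_tokenize(sentence):
    # Words are runs of letters/digits/apostrophes; each punctuation mark is its own token.
    return re.findall(r"[\w']+|[^\w\s]", sentence)

text = "The quick brown fox jumps over the lazy dog. It barked!"
sentences = sent_tokenize(text)
tokens = [word_tokenize(s) for s in sentences]
print(sentences)
print(tokens)
```

Rules like these break on abbreviations such as "Dr." or "e.g.", which is why libraries ship trained sentence splitters instead.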

Normalization

Before further processing, the text needs to be normalized. Normalization generally refers to a series of related tasks meant to put all text on a level playing field: converting all text to the same case (upper or lower), removing punctuation, expanding contractions, converting numbers to their word equivalents, and so on. Normalization puts all words on equal footing and allows processing to proceed uniformly.
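A minimal sketch of a normalization pass over already-tokenized text is shown below. The contraction and number tables are tiny hypothetical stand-ins (only single digits are converted), and the order of the steps is one reasonable choice rather than a standard.

```python
import string

# Hypothetical, deliberately tiny lookup tables; real systems use much larger ones.
CONTRACTIONS = {"don't": "do not", "it's": "it is", "can't": "cannot", "i'm": "i am"}
NUMBERS = {"0": "zero", "1": "one", "2": "two", "3": "three", "4": "four",
           "5": "five", "6": "six", "7": "seven", "8": "eight", "9": "nine"}

def normalize(tokens):
    out = []
    for tok in tokens:
        tok = tok.lower()                     # 1. same case everywhere
        if tok in CONTRACTIONS:               # 2. expand known contractions
            out.extend(CONTRACTIONS[tok].split())
            continue
        tok = tok.strip(string.punctuation)   # 3. strip leading/trailing punctuation
        if not tok:
            continue                          # the token was pure punctuation
        out.append(NUMBERS.get(tok, tok))     # 4. single digits -> words
    return out

print(normalize(["I'm", "sure", "it's", "2", "o'clock", "!"]))
```

Contractions are expanded before punctuation is stripped on purpose: stripping first would not touch the inner apostrophe, but it is easier to reason about lookups on the raw lowercase token.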

Stemming

Stemming is the process of eliminating affixes (suffixes, prefixes, infixes, circumfixes) from a word in order to obtain a word stem.
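Most practical stemmers only strip suffixes. The sketch below is a toy suffix stripper with a hypothetical suffix list, not the Porter algorithm (NLTK's `PorterStemmer` implements that one, with ordered rules and conditions on the remaining stem). It only strips when at least three characters would remain, so short words like "is" survive.

```python
# Hypothetical suffix list; checked longest-first so "ization" wins over "s".
SUFFIXES = ["ization", "ing", "ed", "ly", "es", "s"]

def naive_stem(word, min_stem=3):
    word = word.lower()
    for suffix in sorted(SUFFIXES, key=len, reverse=True):
        # Only strip if a reasonably long stem remains.
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word

for w in ["jumping", "jumped", "jumps", "quickly", "better"]:
    print(w, "->", naive_stem(w))
```

Note that "better" comes out unchanged: no amount of suffix stripping turns it into "good".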

Lemmatization

Lemmatization is related to stemming, but differs in that it captures canonical forms based on a word's lemma, which usually requires a dictionary and knowledge of the word's part of speech.

For example, stemming the word "better" would fail to return its citation form (another word for lemma); however, lemmatization would result in the following:

better → good

It should be easy to see why the implementation of a stemmer would be the less difficult feat of the two.
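The difference in difficulty shows up as soon as you sketch a lemmatizer: it needs a lexicon keyed by word and part of speech. The mini lexicon below is hypothetical; a real lemmatizer such as NLTK's `WordNetLemmatizer` consults WordNet instead.

```python
# Hypothetical mini lexicon mapping (word, part of speech) -> lemma.
LEMMAS = {
    ("better", "adj"): "good",
    ("best", "adj"): "good",
    ("running", "verb"): "run",
    ("ran", "verb"): "run",
    ("mice", "noun"): "mouse",
}

def lemmatize(word, pos):
    # Fall back to the lowercased word itself when the lexicon has no entry.
    return LEMMAS.get((word.lower(), pos), word.lower())

print(lemmatize("better", "adj"))   # good
print(lemmatize("ran", "verb"))     # run
print(lemmatize("better", "verb"))  # better (no entry for this POS)
```

A stemmer gets away with a handful of string rules, while a lemmatizer is only as good as its dictionary and its POS information.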

Corpus or Corpora

In linguistics and NLP, corpus (literally Latin for body) refers to a collection of texts. Such collections may consist of texts in a single language or span multiple languages; there are numerous reasons why multilingual corpora (the plural of corpus) may be useful. Corpora may also consist of themed texts (historical, Biblical, etc.). Corpora are commonly used for statistical linguistic analysis and hypothesis testing.

Named Entity Recognition (NER)

The process of locating and classifying elements in text into predefined categories such as the names of people, organizations, places, monetary values, percentages, etc.
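Modern NER systems learn these categories from annotated corpora, but the simplest version is rule-based. The sketch below combines a hypothetical gazetteer (a lookup list of known names) with regular expressions for monetary values and percentages; it is meant to show the input and output of the task, not a competitive approach.

```python
import re

# Hypothetical gazetteer of known entities and their categories.
GAZETTEER = {"John Smith": "PERSON", "Google": "ORG", "London": "LOC"}
PATTERNS = [
    (re.compile(r"\$\d+(?:,\d{3})*(?:\.\d+)?"), "MONEY"),   # e.g. $1,200.50
    (re.compile(r"\d+(?:\.\d+)?%"), "PERCENT"),              # e.g. 15%
]

def find_entities(text):
    entities = []
    for name, label in GAZETTEER.items():
        # Word boundaries stop "Google" from matching inside "Googleplex".
        for m in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            entities.append((m.group(), label, m.start()))
    for pattern, label in PATTERNS:
        for m in pattern.finditer(text):
            entities.append((m.group(), label, m.start()))
    # Report entities in the order they appear in the text.
    return [(span, label) for span, label, _ in sorted(entities, key=lambda e: e[2])]

text = "John Smith said Google paid $1,200.50 in London, up 15%."
print(find_entities(text))
```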

Stop Words

Stop words are words that are filtered out before further processing of text because they contribute little to overall meaning, given that they are generally the most common words in a language. For instance, "the," "and," and "a," while all required words in a particular passage, don't generally contribute greatly to one's understanding of its content. As a simple example, the following pangram is just as legible if the stop words are removed:

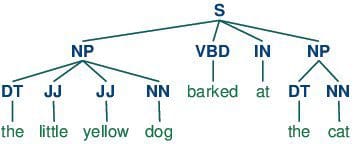

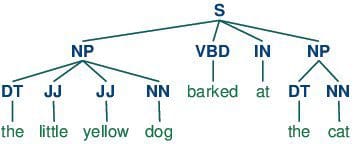
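Here is the same idea in code, applied to the pangram "The quick brown fox jumps over the lazy dog." The stop list is a small hand-picked assumption; NLTK and spaCy ship much longer English lists.

```python
import re

# Small hand-picked stop list (an assumption, not any library's official list).
STOP_WORDS = {"the", "a", "an", "and", "over", "of", "to", "in", "is"}

def remove_stop_words(text):
    tokens = re.findall(r"[a-z']+", text.lower())
    return [t for t in tokens if t not in STOP_WORDS]

print(remove_stop_words("The quick brown fox jumps over the lazy dog."))
# ['quick', 'brown', 'fox', 'jumps', 'lazy', 'dog']
```

Which words count as "stop words" depends on the task: "over" is noise for topic detection but may matter for, say, parsing directions.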

Part-of-speech (POS) Tagging

POS tagging consists of assigning a category tag to each token of a sentence. The most common form of POS tagging identifies words as nouns, verbs, adjectives, etc.

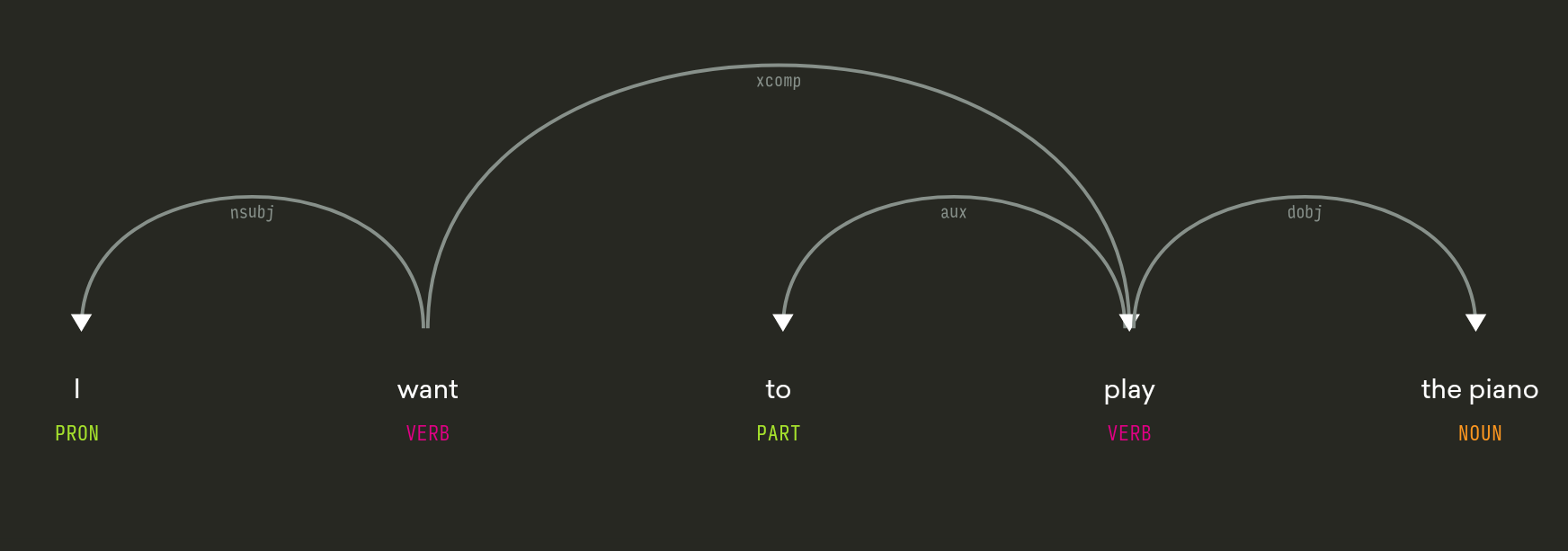
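One of the simplest taggers you can build is the most-frequent-tag (unigram) baseline: each word gets the tag it carried most often in tagged training data. The training sentences and tag names below are hypothetical; real taggers train on large annotated corpora, and NLTK offers this baseline as `UnigramTagger`.

```python
from collections import Counter, defaultdict

# Tiny hypothetical hand-tagged training data.
TRAINING = [
    [("the", "DET"), ("dog", "NOUN"), ("barks", "VERB")],
    [("a", "DET"), ("quick", "ADJ"), ("fox", "NOUN"), ("jumps", "VERB")],
    [("the", "DET"), ("lazy", "ADJ"), ("dog", "NOUN"), ("sleeps", "VERB")],
    [("dogs", "NOUN"), ("bark", "VERB")],
]

def train_unigram_tagger(sentences):
    counts = defaultdict(Counter)
    for sentence in sentences:
        for word, tag in sentence:
            counts[word][tag] += 1
    # Each known word gets the tag it carried most often in training.
    return {word: tags.most_common(1)[0][0] for word, tags in counts.items()}

def tag(tokens, model, default="NOUN"):
    # Unknown words fall back to a default tag.
    return [(t, model.get(t.lower(), default)) for t in tokens]

model = train_unigram_tagger(TRAINING)
print(tag(["The", "quick", "dog", "sleeps"], model))
```

Because it ignores context, this baseline always tags "bark" the same way, whether it is the dog's bark or the tree's; context-aware taggers exist precisely to fix that.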

Dependency Parsing

Sometimes, instead of the category (POS tag) of a word, we want to know the role that word plays in a specific sentence of our corpus; this is the task of dependency parsers. The objective is to obtain the dependencies, or relations, between words in the form of a dependency tree. In general terms, the dependencies considered are subject, object, complement, and modifier relations.

As an example, given the sentence “I want to play the piano”, a dependency parser would produce the following tree:

[Figure: dependency tree for “I want to play the piano”, from spaCy]
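A common way to store a parser's output is to give each token the index of its head and a relation label (spaCy exposes these as `token.head` and `token.dep_`, with the root token as its own head). The parse below is written by hand for "I want to play the piano" using spaCy-style labels; it is an assumption, not captured parser output. The point is that once the tree exists, questions about roles become simple lookups.

```python
# Hand-written parse (an assumption, not real parser output).
# heads[i] is the index of token i's head; the root points to itself.
tokens = ["I", "want", "to", "play", "the", "piano"]
heads = [1, 1, 3, 1, 5, 3]
labels = ["nsubj", "ROOT", "aux", "xcomp", "det", "dobj"]

def children(i):
    return [j for j, h in enumerate(heads) if h == i and j != i]

def show(i, depth=0):
    # Print the subtree rooted at token i, indenting one level per dependency.
    print("  " * depth + f"{tokens[i]} ({labels[i]})")
    for j in children(i):
        show(j, depth + 1)

root = labels.index("ROOT")
show(root)

# Role queries become lookups on the tree.
subject = [tokens[j] for j in children(root) if labels[j] == "nsubj"]
print(subject)  # ['I']
```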

Statistical Language Modeling

Statistical language modeling is the process of building a statistical language model, which is meant to estimate the probability distribution of a natural language. Given a sequence of input words, the model assigns a probability to the entire sequence, which makes it possible to compare the likelihood of various possible sequences. This can be especially useful for NLP applications that generate text.
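The paragraph doesn't commit to a particular model, so as an assumption here is the classic bigram model with maximum-likelihood (relative-frequency) estimates. By the chain rule with a first-order Markov assumption, the probability of a sentence is approximated as the product of P(word | previous word); `<s>` and `</s>` mark sentence boundaries.

```python
from collections import Counter

def train_bigram_lm(sentences):
    unigrams, bigrams = Counter(), Counter()
    for s in sentences:
        tokens = ["<s>"] + s.lower().split() + ["</s>"]
        unigrams.update(tokens[:-1])  # every token that can precede another
        bigrams.update(zip(tokens[:-1], tokens[1:]))
    return unigrams, bigrams

def sentence_probability(sentence, unigrams, bigrams):
    # P(w1..wn) ~= product of P(w_i | w_{i-1}), each estimated as
    # count(w_{i-1}, w_i) / count(w_{i-1}).
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    p = 1.0
    for prev, word in zip(tokens[:-1], tokens[1:]):
        if unigrams[prev] == 0:
            return 0.0
        p *= bigrams[(prev, word)] / unigrams[prev]
    return p

corpus = ["the dog barks", "the cat meows", "the dog sleeps"]
uni, bi = train_bigram_lm(corpus)
print(sentence_probability("the dog barks", uni, bi))  # 1 * 2/3 * 1/2 * 1 = 1/3
print(sentence_probability("the cat barks", uni, bi))  # 0.0: "cat barks" never seen
```

The second result shows the weakness of raw relative frequencies: any unseen bigram zeroes out the whole sentence, which is why practical models apply smoothing.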

Bag of Words

Bag of words is a particular representation model used to simplify the contents of a selection of text. The bag-of-words model omits grammar and word order and is interested only in the number of occurrences of each word within the text. The ultimate representation of the text selection is that of a bag of words ("bag" referring to the set theory concept of multisets, which differ from simple sets in that an element can occur more than once).

Actual storage mechanisms for the bag-of-words representation can vary, but the following is a simple example using a dictionary for intuitiveness. Sample text:

"Well, well, well," said John.

"There, there," said James. "There, there."

The resulting bag of words representation as a dictionary:

{'well': 3, 'said': 2, 'john': 1, 'there': 4, 'james': 1}
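A minimal sketch that produces exactly this dictionary from both sample sentences ("Well, well, well," said John. and "There, there," said James. "There, there."), using `collections.Counter`. The tokenization rule (lowercase, keep alphabetic runs) is my own choice; scikit-learn's `CountVectorizer` builds the same kind of counts as a document-term matrix.

```python
import re
from collections import Counter

def bag_of_words(text):
    # Lowercase, keep alphabetic runs, and count them; word order is discarded.
    return Counter(re.findall(r"[a-z]+", text.lower()))

text = '"Well, well, well," said John. "There, there," said James. "There, there."'
bow = bag_of_words(text)
print(dict(bow))
```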

n-grams

n-gram modeling is another representation model for simplifying the contents of a text selection. As opposed to the orderless bag-of-words representation, n-gram modeling preserves contiguous sequences of n items from the text selection.

An example of a trigram (3-gram) model of the second sentence of the above example ("There, there," said James. "There, there.") appears below as a list:

["there there said", "there said james", "said james there", "james there there"]
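Generating n-grams is just sliding a window of width n over the token list. This sketch reproduces the trigram list above; NLTK has an equivalent helper, `nltk.util.ngrams`, which yields tuples instead of joined strings.

```python
import re

def ngrams(tokens, n):
    # Slide a window of width n over the tokens, one position at a time.
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

tokens = re.findall(r"[a-z]+", '"There, there," said James. "There, there."'.lower())
print(ngrams(tokens, 3))
```

With six tokens and n = 3 there are 6 - 3 + 1 = 4 trigrams; setting n = 1 gives back the individual words, i.e. the raw material of a bag of words.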

Regular Expressions

Regular expressions, often abbreviated regex or regexp, are a tried-and-true method of concisely describing patterns of text. A regular expression is itself represented as a special text string and is used to define search patterns over selections of text. Regular expressions can be thought of as an expanded set of rules beyond the wildcard characters ? and *. Though often cited as frustrating to learn, regular expressions are incredibly powerful text-searching tools.

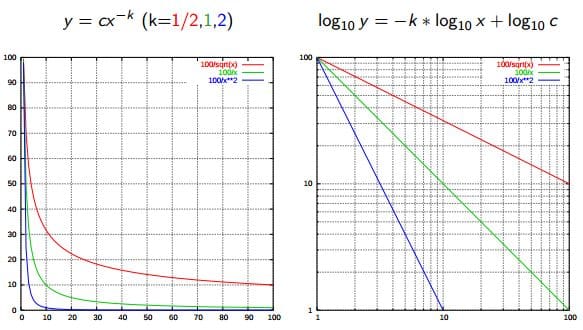

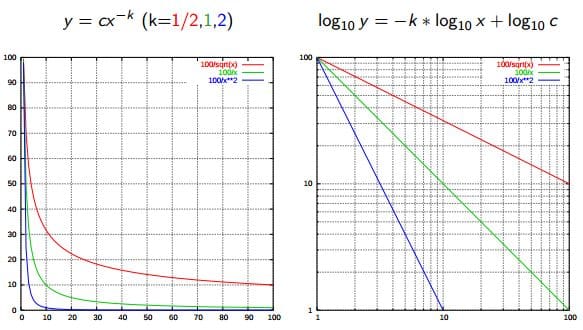
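A small example with Python's built-in `re` module: one pattern pulls out phone-number-like strings, another pulls out e-mail addresses. Both patterns are deliberately simple illustrations; real e-mail validation is far messier.

```python
import re

text = "Call 555-1234 or 555-9876 before 5pm; email info@example.com."

# \b\d{3}-\d{4}\b: three digits, a hyphen, four digits, on word boundaries.
phones = re.findall(r"\b\d{3}-\d{4}\b", text)

# Local part, '@', then one or more dot-separated domain labels.
emails = re.findall(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+", text)

print(phones)  # ['555-1234', '555-9876']
print(emails)  # ['info@example.com']
```

Note how the domain pattern requires at least one word character after every dot, so the sentence-final period is not swallowed into the address.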

Zipf's Law

Zipf's law describes the relationship between word frequencies in document collections. If a document collection's words are ordered by frequency, and y is the number of times that the xth word appears, Zipf's observation is concisely captured as $y = c x^{-1}$ (item frequency is inversely proportional to item rank). More generally, Wikipedia says:

Zipf's law states that given some corpus of natural language utterances, the frequency of any word is inversely proportional to its rank in the frequency table. Thus the most frequent word will occur approximately twice as often as the second most frequent word, three times as often as the third most frequent word, etc.
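Since $y = c / x$, multiplying each word's frequency by its rank should give roughly the same constant c. The sketch below builds a rank-frequency table; the toy text is contrived so the counts (6, 3, 2) follow the law exactly, whereas real corpora only approximate it.

```python
from collections import Counter

def rank_frequency(tokens):
    # Sort words by descending frequency; rank 1 is the most frequent word.
    counts = Counter(tokens).most_common()
    return [(rank, word, freq) for rank, (word, freq) in enumerate(counts, start=1)]

# Contrived toy text whose counts follow Zipf's law exactly (c = 6).
tokens = ["the"] * 6 + ["of"] * 3 + ["and"] * 2
for rank, word, freq in rank_frequency(tokens):
    # Under y = c / x, freq * rank stays (roughly) constant.
    print(rank, word, freq, freq * rank)
```

Running the same function over a real corpus and plotting log(freq) against log(rank) should give a line with slope close to -1.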

Word Sense Disambiguation

The ability to computationally identify the meaning of words in context. A third-party corpus or knowledge base, such as WordNet or Wikipedia, is often used to cross-reference entities as part of this process. A simple example is an algorithm determining whether a reference to “apple” in a piece of text refers to the company or the fruit.
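One classic knowledge-based approach is the simplified Lesk algorithm: choose the sense whose dictionary gloss shares the most content words with the surrounding sentence. The sense inventory and glosses below are hypothetical (real systems use WordNet glosses; NLTK ships a Lesk implementation as `nltk.wsd.lesk`).

```python
import re

# Hypothetical sense inventory with short glosses.
SENSES = {
    "apple": {
        "company": "technology company that designs iphone mac computers software and devices",
        "fruit": "round fruit of a tree with red or green skin eaten raw or in pie",
    }
}
STOP = {"the", "a", "an", "of", "and", "or", "in", "with", "that", "i", "my", "to", "is"}

def content_words(text):
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP}

def simplified_lesk(word, sentence):
    # Pick the sense whose gloss overlaps most with the context words.
    context = content_words(sentence) - {word}
    best_sense, best_overlap = None, -1
    for sense, gloss in SENSES[word].items():
        overlap = len(context & content_words(gloss))
        if overlap > best_overlap:
            best_sense, best_overlap = sense, overlap
    return best_sense

print(simplified_lesk("apple", "Apple released new software for its computers"))  # company
print(simplified_lesk("apple", "I baked an apple pie with fruit from the tree"))   # fruit
```

The method lives or dies by gloss quality: a context that shares no words with any gloss falls back to whichever sense is listed first.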

Latent Dirichlet Allocation (LDA)

A common topic modelling technique, LDA is based on the premise that each document or piece of text is a mixture of a small number of topics and that each word in a document is attributable to one of the topics.
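To show what "mixture of topics" means operationally, here is a compact sketch of LDA trained with collapsed Gibbs sampling, one standard inference method (libraries such as gensim's `LdaModel` and scikit-learn's `LatentDirichletAllocation` use variational methods instead). The toy documents, hyperparameters `alpha`/`beta`, and iteration count are assumptions; with this little data the topics are noisy and depend on the seed.

```python
import random
from collections import defaultdict

def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Collapsed Gibbs sampling for LDA; docs is a list of token lists."""
    rng = random.Random(seed)
    vocab_size = len({w for doc in docs for w in doc})
    doc_topic = [[0] * num_topics for _ in docs]                # n(d, k)
    topic_word = [defaultdict(int) for _ in range(num_topics)]  # n(k, w)
    topic_total = [0] * num_topics                              # n(k)
    z = []                                                      # topic of each token

    # Start from a random topic for every token.
    for d, doc in enumerate(docs):
        z.append([])
        for w in doc:
            k = rng.randrange(num_topics)
            z[d].append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]
                # Remove the token's current assignment from the counts.
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                # P(z = t | rest) is proportional to
                # (n(d,t) + alpha) * (n(t,w) + beta) / (n(t) + V * beta).
                weights = [
                    (doc_topic[d][t] + alpha) * (topic_word[t][w] + beta)
                    / (topic_total[t] + vocab_size * beta)
                    for t in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                z[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1

    # Per-document topic mixtures (theta), smoothed by alpha.
    theta = [
        [(doc_topic[d][t] + alpha) / (len(doc) + num_topics * alpha) for t in range(num_topics)]
        for d, doc in enumerate(docs)
    ]
    return theta, topic_word

docs = [
    "apple banana fruit apple banana".split(),
    "goal match team goal match".split(),
    "banana fruit apple juice".split(),
    "team match goal score".split(),
]
theta, topic_word = lda_gibbs(docs, num_topics=2)
for k, counts in enumerate(topic_word):
    top = sorted(counts, key=counts.get, reverse=True)[:3]
    print("topic", k, top)
```

Each row of `theta` is one document's topic mixture, and every token's topic assignment corresponds to the "each word is attributable to one of the topics" premise.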

Similarity Measures

There are numerous similarity measures which can be applied to NLP. What are we measuring the similarity of? Generally, strings.

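- Levenshtein - the minimum number of single-character deletions, insertions, or substitutions needed to make a pair of strings equal

- Jaccard - the size of the overlap (intersection) between 2 sets divided by the size of their union; in the case of NLP, documents are generally treated as sets of words

- Smith-Waterman - a local alignment algorithm related to Levenshtein distance, but with scores assigned to matches, substitutions, insertions, and deletions, so that it finds the best-matching pair of substrings rather than comparing whole strings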
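The first two measures are short enough to write out. Levenshtein distance uses the standard dynamic-programming recurrence, keeping only one previous row; Jaccard treats each document as a set of lowercase words. Smith-Waterman is not sketched here.

```python
def levenshtein(a, b):
    # prev[j] = edit distance between the processed prefix of a and b[:j].
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = curr
    return prev[-1]

def jaccard(doc_a, doc_b):
    # Treat each document as a set of lowercase words.
    a, b = set(doc_a.lower().split()), set(doc_b.lower().split())
    if not a and not b:
        return 1.0  # convention: two empty documents are identical
    return len(a & b) / len(a | b)

print(levenshtein("kitten", "sitting"))       # 3
print(jaccard("the cat sat", "the cat ran"))  # 0.5
```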

Syntactic Analysis

Also referred to as parsing, syntactic analysis is the task of analyzing strings as symbols and ensuring their conformance to an established set of grammatical rules. This step must, out of necessity, come before any further analysis that attempts to extract insight from the text (semantic, sentiment, etc.) by treating it as something beyond symbols.
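"Ensuring conformance to a set of grammatical rules" can be made concrete with the CYK algorithm, which decides whether a sentence can be derived from a grammar in Chomsky normal form. The toy grammar below is hypothetical and covers only simple subject-verb-object sentences.

```python
from itertools import product

# Hypothetical toy grammar in Chomsky normal form.
BINARY = {                      # A -> B C
    ("NP", "VP"): {"S"},
    ("Det", "N"): {"NP"},
    ("V", "NP"): {"VP"},
}
LEXICAL = {                     # A -> word
    "the": {"Det"}, "a": {"Det"},
    "dog": {"N"}, "cat": {"N"},
    "chased": {"V"}, "saw": {"V"},
}

def cyk_accepts(words, start="S"):
    n = len(words)
    # table[i][j] holds the nonterminals that can derive words[i..j].
    table = [[set() for _ in range(n)] for _ in range(n)]
    for i, w in enumerate(words):
        table[i][i] = set(LEXICAL.get(w, ()))
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length - 1
            for split in range(i, j):
                for b, c in product(table[i][split], table[split + 1][j]):
                    table[i][j] |= BINARY.get((b, c), set())
    return n > 0 and start in table[0][n - 1]

print(cyk_accepts("the dog chased a cat".split()))  # True
print(cyk_accepts("dog the chased cat a".split()))  # False
```

The same words in a scrambled order are rejected: syntactic analysis only checks structure, not meaning, which is left to semantic analysis.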

Semantic Analysis

Also known as meaning generation, semantic analysis is interested in determining the meaning of text selections (either character or word sequences). After an input selection of text is read and parsed (analyzed syntactically), the text selection can then be interpreted for meaning. Simply put, syntactic analysis is concerned with what words a text selection was made up of, while semantic analysis wants to know what the collection of words actually means. The topic of semantic analysis is both broad and deep, with a wide variety of tools and techniques at the researcher's disposal.

Sentiment Analysis

Sentiment analysis is the process of evaluating and determining the sentiment captured in a selection of text, with sentiment defined as feeling or emotion. This sentiment can be simply positive (happy), negative (sad or angry), or neutral, or can be some more precise measurement along a scale, with neutral in the middle, and positive and negative increasing in either direction.
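The simplest way to get a score along such a scale is a polarity lexicon: sum per-word scores, flip a word's score after a negator, and map the total to a label. The lexicon, negator list, and threshold below are hypothetical; real lexicons such as VADER's are far larger and handle intensifiers, punctuation, and more.

```python
import re

# Tiny hypothetical polarity lexicon.
LEXICON = {"love": 2, "great": 2, "good": 1, "happy": 1,
           "bad": -1, "sad": -1, "terrible": -2, "hate": -2}
NEGATORS = {"not", "never", "no"}

def sentiment(text, threshold=0):
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok, 0)
        # Flip the polarity of a word directly preceded by a negator.
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    if score > threshold:
        label = "positive"
    elif score < -threshold:
        label = "negative"
    else:
        label = "neutral"
    return score, label

print(sentiment("I love this phone, the camera is great"))  # (4, 'positive')
print(sentiment("The battery is not good"))                 # (-1, 'negative')
print(sentiment("It arrived on Tuesday"))                   # (0, 'neutral')
```

The raw `score` is the "more precise measurement along a scale", with 0 as neutral; the label is just a coarse bucketing of it.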

Information Retrieval

Information retrieval is the process of accessing and retrieving the most relevant information from text based on a particular query, using context-based indexing or metadata. One of the most famous examples of information retrieval is Google Search.
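At its core, a search engine combines an inverted index (term to the documents containing it) with a ranking function. This sketch uses a plain TF-IDF score, term frequency times log(N / document frequency), as an assumed ranking; production engines use far more elaborate signals.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())

def build_index(docs):
    # Inverted index: term -> {doc_id: term frequency in that document}.
    index = defaultdict(dict)
    for doc_id, text in enumerate(docs):
        for term, tf in Counter(tokenize(text)).items():
            index[term][doc_id] = tf
    return index

def search(query, index, num_docs, top_k=3):
    scores = Counter()
    for term in set(tokenize(query)):
        postings = index.get(term, {})
        if not postings:
            continue
        # Terms found in fewer documents get a higher IDF weight.
        idf = math.log(num_docs / len(postings))
        for doc_id, tf in postings.items():
            scores[doc_id] += tf * idf
    return scores.most_common(top_k)

docs = [
    "the quick brown fox",
    "the lazy dog sleeps",
    "a quick dog chases the fox",
]
index = build_index(docs)
print(search("lazy dog", index, len(docs)))  # doc 1 ranks above doc 2
```

"lazy" appears in only one document, so it carries more weight than "dog"; a word like "the", present everywhere, gets an IDF of log(1) = 0 and contributes nothing.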

Some other very informative and detailed glossaries I found are:

- http://language.worldofcomputing.net/category/nlp-glossary

- http://www.cse.unsw.edu.au/~billw/nlpdict.html

- http://www.cs.bham.ac.uk/~pxc/nlp/nlpgloss.html

- https://www.searchtechnologies.com/blog/natural-language-processing-techniques
